Apart from equality, comparison, and hashing, are there any *other* operations where it's important to have some language mechanism to avoid mixing up data structures built with different implementations of the operation?

(Mechanisms such as type class canonicity a.k.a. trait coherence, type-level tags, generative functors, ...)

Basically, I'm wondering how much of the low-hanging fruit would be picked by special-casing just these operations specifically.

On the off chance you're not already aware and that it helps: do you know you can write

import Control.Monad

instance Monad M where ...

instance Functor M where fmap = liftM

instance Applicative M where pure = return; liftA2 = liftM2

?

Nervous System is a strange business. We are a computational design and digital fabrication studio known for things like a no-assembly 3D printed dress or designing 3D printed organs.

However neither of those things actually make any money. Monetarily we are primarily a jigsaw puzzle company. How did that happen?

So here’s a thread about jigsaw puzzles.

Reminds me of this story where automotive engineers had to fight designers to get headlights that illuminated the road. They were actually losing the fight until Consumer Reports started testing headlights for function, which created enough of a stink that engineers were able to push for headlights that actually work over headlights that follow modern design principles: https://danluu.com/why-benchmark/

And sure, #notalldesigners, but this is a problem that goes back decades across multiple industries.

For although light oftenest behaves as a wave, it can be looked on as a mote, the "lightbit". A mote of stuff can behave not only as a chunk, but as a wave. Down among the unclefts, things do not happen in steady flowings, but in leaps between bestandings that are forbidden. The knowledge-hunt of this is called "lump beholding".

Nor are stuff and work unakin! Rather, they are groundwise the same, and one can be shifted into the other. The kinship between them is that work is like unto weight manifolded by the fourside of the haste of light.

......

Imagine particle physics explained by modern-day Vikings! This is from an essay written by Poul Anderson. It's in Anglish, a variant of English with the Greek and Romance language influences removed.

Thanks to @dznz for pointing this out.

https://www.ling.upenn.edu/~beatrice/110/docs/uncleftish_beholding.html

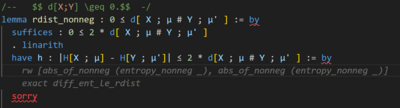

I've written a blog post at https://terrytao.wordpress.com/2023/11/18/formalizing-the-proof-of-pfr-in-lean4-using-blueprint-a-short-tour/ giving a "quick tour" of the #Lean4 + Blueprint combination used to formalize the proof of the PFR conjecture that I recently completed with Tim Gowers, Ben Green, and Freddie Manners.

I wrote all of this for a reporter. I want to share it here. I don't have the energy for alt text, sorry, and the copy-paste mechanism on Twitter DMs on my phone is broken. Maybe I'll manage on my computer later. This is part one of two, really spilling my soul in ink on my feelings about this past month and a half. I don't know how much will make it into the article; probably not much.

I wrote a little thing about destination-driven code generation because I'm nervous to try and write a full register allocator from scratch

Turning and turning in the widening gyre

Horse breaks free mid-air, forcing

Cargo plane's return to New York.

Things fall apart; the centre cannot hold;

Horse becomes untethered in flight and pilot says,

'We cannot get the horse back secured.'

@pigworker I hadn't contemplated that *that* Data.Void proposal might've been *the* Haskell-side origin (having assumed it to be more ancient); looking back at that discussion though, there is a message from Lennart that it was, in fact, already in 1.4: https://mail.haskell.org/pipermail/libraries/2006-October/005991.html

And checking just now, it seems to have also been in 1.3, but not, seemingly, 1.2. So, that's at least one question answered.

@pigworker I've been wanting to track down for some time where "void" as a type name originated and what its original meaning was (and whether a later language borrowed it from an earlier one and changed it); if you have any leads they would be much appreciated. Apparently you reckon C had it first?

the way I feel about nix after using it for 10 months (mostly baffled, 80% of my experience feels like random things happening that I have 0 insight into) is giving me some empathy for how people must feel about git

My very brief summary of Apple's new "dynamic caching" as described in this video: https://developer.apple.com/videos/play/tech-talks/111375

There's a shared pool of on-chip memory on the shader core that can be dynamically split up to serve as register file, tile cache, shared memory, general buffer L1, or stack. Since it's dynamic even within the lifetime of a thread/wave, the registers can be allocated dynamically as the program needs them rather than needing registers to be statically-allocated up-front.

This is a nice overview of Maranget's algorithm for compiling pattern matching: https://compiler.club/compiling-pattern-matching/

@dpiponi That's my recollection as well

@dpiponi Pondering "so what's a type of which only a single value can exist at a time?", thought of: State# RealWorld. Hmm.

It just dawned on me that the fanciful nonsense I wrote here ages ago

http://blog.sigfpe.com/2006/09/infinitesimal-types.html

makes some kind of sense now that Haskell has linear types

...that's definitely one of the more cursed things I've ever seen in C++. Apparently the __PRETTY_FUNCTION__ macro is expanded *after* template instantiation.

https://discuss.systems/@tobinbaker/111389666037676146

Background: this is motivated by compile-time 32-bit type ID extraction for template types. Here's the best I have so far (relies on GCC __PRETTY_FUNCTION__ macro also supported in clang):

template<typename T>

consteval uint32_t get_type_id()

{

const char* type_name = __PRETTY_FUNCTION__;

return murmur3_32(type_name);

}

Then I use this function to initialize a constinit static member for the template type ID.