It’s important to distinguish between two things: a classical utilitarianism that assumes some sort of objective moral utility function determining what is right, and a utilitarianism that just says one way to model moral action is to define a utility function over the things you care about, such that higher values are better, and ask how actions affect that function.

I believe the second is true and useful, unlike the first.
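To make the second sense concrete, here's a minimal sketch in Python. Everything in it is made up for illustration: the things cared about, the weights, the two actions, and the choice of a weighted sum are all assumptions, not a claim about what anyone's utility function actually is.

```python
from typing import Dict

State = Dict[str, float]

# Hypothetical weights: how much this particular agent cares about each thing.
WEIGHTS: State = {"wellbeing": 2.0, "honesty": 1.0}


def utility(state: State) -> float:
    """Higher is better. A weighted sum is just one possible modeling choice."""
    return sum(weight * state.get(key, 0.0) for key, weight in WEIGHTS.items())


def apply_action(state: State, effects: State) -> State:
    """Return the state after adding an action's (assumed) effects."""
    keys = set(state) | set(effects)
    return {k: state.get(k, 0.0) + effects.get(k, 0.0) for k in keys}


def utility_change(state: State, effects: State) -> float:
    """How much an action moves the utility function up or down."""
    return utility(apply_action(state, effects)) - utility(state)


def best_action(state: State, actions: Dict[str, State]) -> str:
    """Pick the action with the largest utility change."""
    return max(actions, key=lambda name: utility_change(state, actions[name]))


# Illustrative starting point and actions (all numbers are invented).
state = {"wellbeing": 1.0, "honesty": 1.0}
actions = {
    "white_lie": {"wellbeing": 0.5, "honesty": -2.0},
    "tell_truth": {"wellbeing": -0.25, "honesty": 1.0},
}
```

Nothing here says the weights are objectively correct. Change `WEIGHTS` and `best_action` may change its answer, which is the whole difference from the first kind of utilitarianism.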

Moreover, during the training or evolution of a superintelligence, Omohundro drives would likely not only emerge but become intrinsically valued (à la mesa-optimizers), and override the original goal.

Notice that in nature, every terminal goal has always come about as a proxy for an Omohundro drive.

I just understood the argument [against the orthogonality hypothesis](https://web.archive.org/web/20200701082447/https://www.xenosystems.net/against-orthogonality/). I’m not completely sold, but I’m interested.

It’s not entirely wrong; a superintelligent paperclip maximizer could exist. But terminal goals are not in practice independent from intelligence, because an agent pursuing Omohundro drives for their own sake may be able to self-improve more efficiently than a paperclip maximizer.

Yeah, it's definitely very bad to try to pass off AI-generated content as your own. That's lying.

Even if you acknowledge that an LLM made a text or image, there are good and bad ways to share that. You usually don't want the reader to begin without knowing how the text was made.

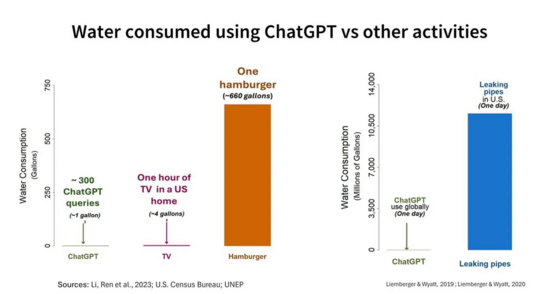

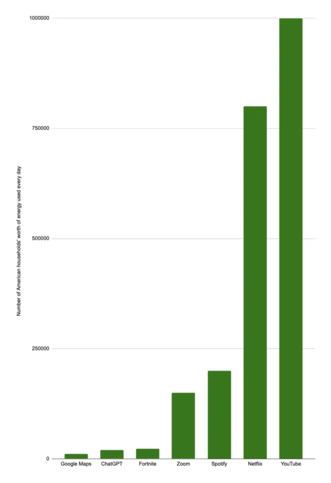

Maybe I'm wrong here, but what I've been told is that energy consumption from modern AI is next to nothing compared to things like streaming videos or making video calls.

Unless you don't think that's true (in which case please tell me why), or you never stream videos or make video calls, you're the pot calling the kettle black.

Perhaps - but the prompt wasn't conceived as a text to be published, it was conceived as an image prompt.

That's all from me on this topic for now. Thanks for engaging.

I'm saying it's not a series of choices. It's one idea and one choice. Kind of like the one idea and choice that might lead to a single prompt? So maybe one prompt can be art?

Look, I understand this completely, and it's a valid reason artists might choose not to use AI tools. But surely you see that the same is true of photography: the image is a lot less expression and a lot more of something else.

Putting a Ghibli filter on a photo isn't that expressive, I agree. There are other reasons someone might want to do it, like fun. Or, to be charitable, it emphasizes certain aspects of the photo that weren't emphasized before.

Not so for the more general case, especially when someone is carefully and repeatedly prompting a model.

Also, there's lots of human expression that isn't a series of choices. Artists have put urinals in museum galleries, and there's improv performance art.

I fully agree there's no quality bar to making art. And you can turn that the other way to apply to AI - even if it's low quality, it's still a valid form of expression!

If you're angry that AI image generation might stop people from becoming 'actual' artists, maybe get angry at camera technology for stopping photographers from becoming painters?

This is one more step in the same direction.

Sure, everyone is an artist. What I mean when I say non-artist is people who don't usually spend lots of time making art, who haven't put effort into developing a skill that helps them express themselves artistically.

And I'm not saying low-effort AI-generated pictures are inherently better than basic scribbles in every sense. I'm saying that they open different creative outlets.

- Personal Website

- https://satchlj.com